My first Zapier Zap: Send emails via Gmail when Google Sheets rows are updated (part 2)

You're reading part 2 of My first Zapier Zap: Send emails via Gmail when Google Sheets rows are updated series, where I built an automation that send emails via Gmail when Google Sheets rows are updated:

-

Check out Part 1 to see how I built and publish this automation using Zapier Zap with the Zap editor.

Once I got my first Zap working I wanted to try doing the same thing in Python using Google APIs.

In Zapier, I noticed that the trigger I chose was

specified as instant. I

wondered how Zapier managed to detect spreadsheet changes so fast.

My first thought was to look for push notifications in the Google

Sheets API. There isn't.

Google Sheets API doesn't offer such a service.

Then, I remembered that when I authenticated and authorized Zapier for triggering on row changes, I had to give access not just to Google Sheets, but also to Google Drive. Now I understood why. The Google Drive API does offer push notifications. I suspect this is how Zapier gets notified instantly.

But to start, I wanted to stick with just the Google Sheets API and Gmail API.

So I opted for a polling script that checks the spreadsheet for changes from time to time.

I ended up writing the sheets2gmail_polling.py script, which works like this:

-

Every

POLLING_INTERVALseconds, it retrieves the rows inSHEET_NAMEfromSPREADSHEET_ID(the string between/d/and/editin the URL likehttps://docs.google.com/spreadsheets/d/<id>/edit). -

Then, it checks which rows are new or have been updated by comparing their hashes (using the

row_hashfunction) to the ones stored in thecached_rowstable of thecache.sqlite3database. This is done bycheck_rows. -

Then, for every new or updated row found, it updates the cache in an atomic operation (updating

cached_rowsand queuing emails to be sent in thepending_emailstable). This is done byupdate_cache. -

Finally, it sends all the queued emails in

pending_emails. If an email fails to send three times, it won't try to send it again when the script detects updates or is restarted. This is done bysend_emails.

Here's the

main function:

def main():

conn = setup_cache_db(CACHE_DB_FILE)

creds = credentials()

# In case the program previously stopped before sending all the emails

send_emails(conn, creds, FROM_ADDR)

while True:

rows = get_rows(creds, SPREADSHEET_ID, SHEET_NAME)

if not rows or len(rows) == 1:

logger.info(f"No data in spreadsheet {SPREADSHEET_ID}, range {SHEET_NAME}")

else:

new_updated_rows = check_rows(conn, rows)

update_cache(conn, new_updated_rows, len(rows))

send_emails(conn, creds, FROM_ADDR)

time.sleep(POLLING_INTERVAL)

conn.close()Setting up the sheets2gmail project in the Google Cloud console

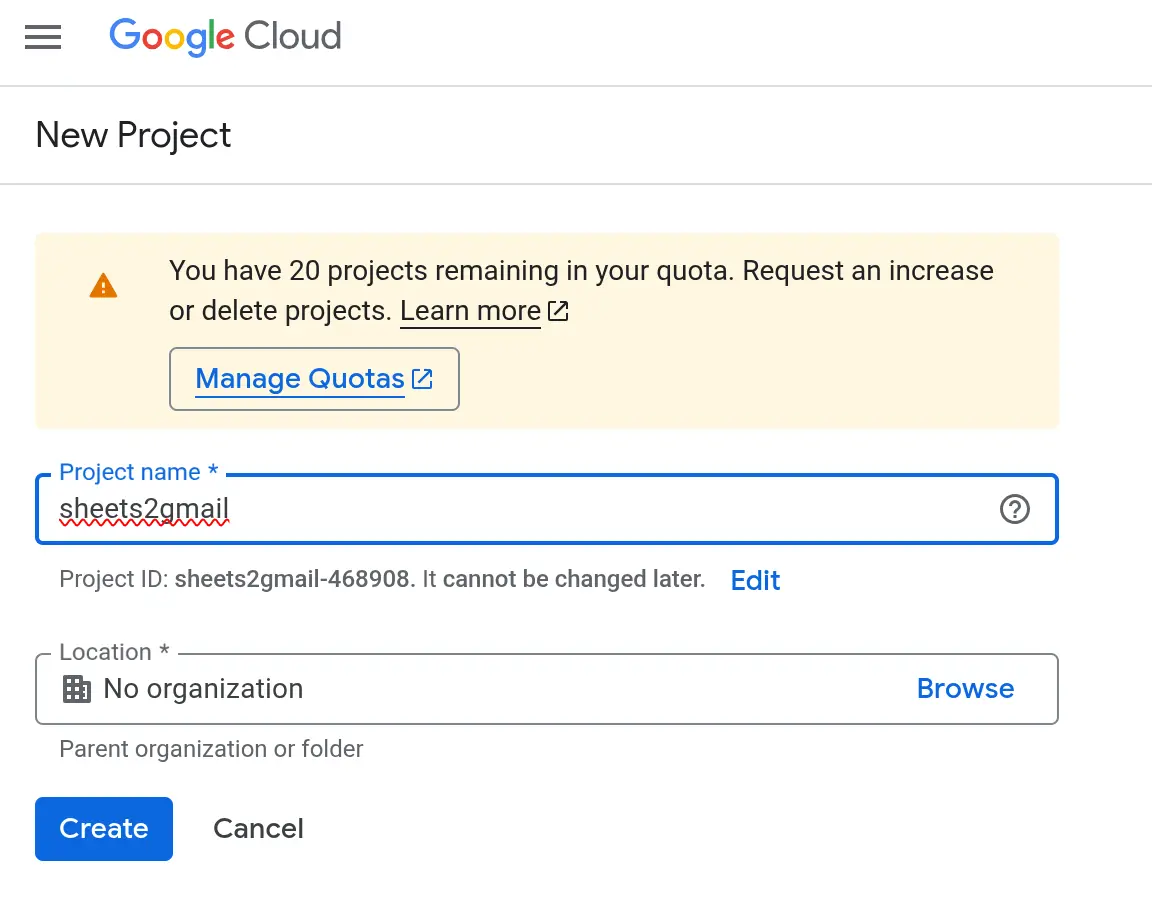

In the Google Cloud console:

-

I created the project

sheets2gmail:

-

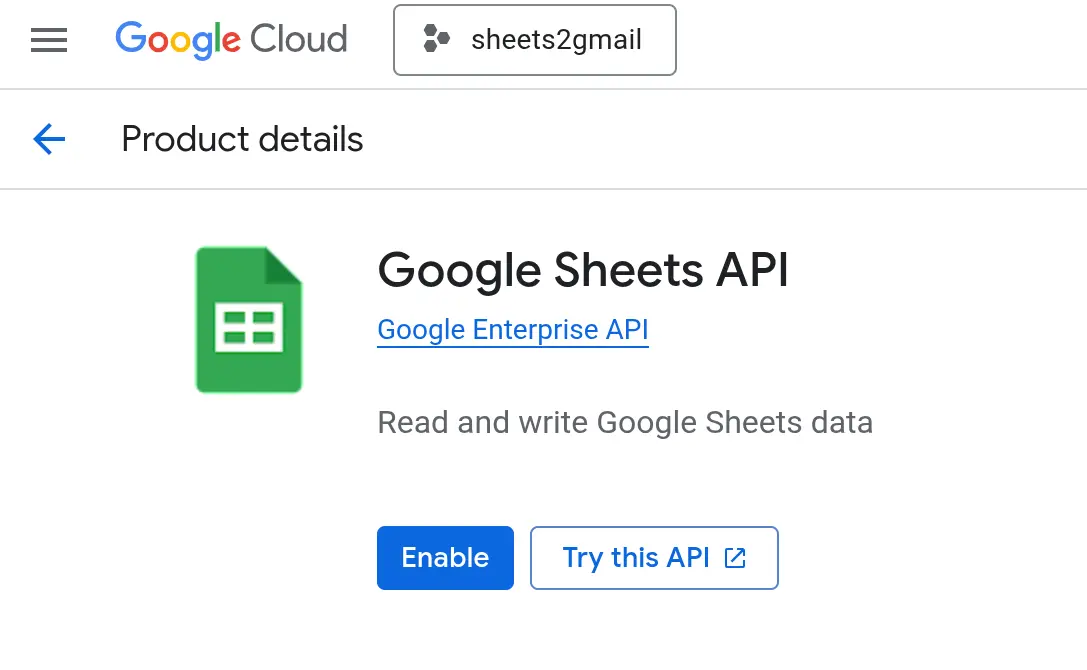

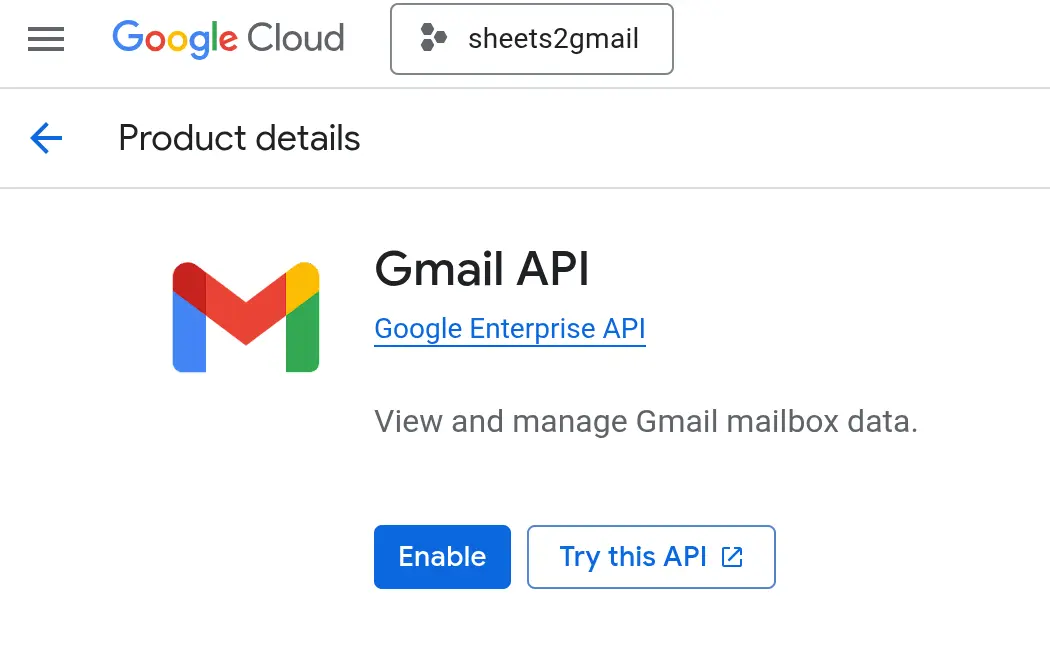

In that project, I enabled both the Google Sheets API and the Gmail API:

-

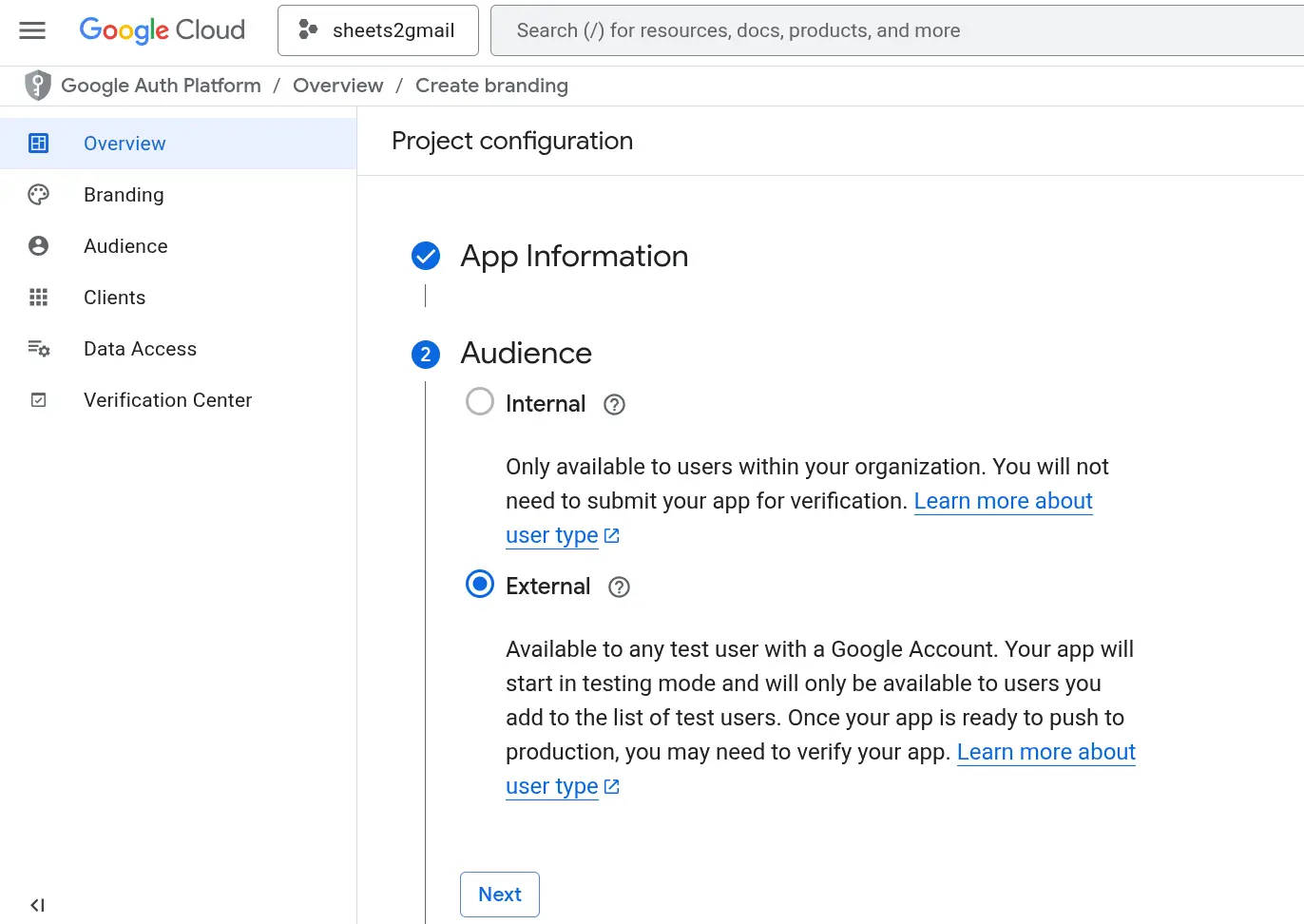

I set up the OAuth consent screen selecting the "External" audience:

-

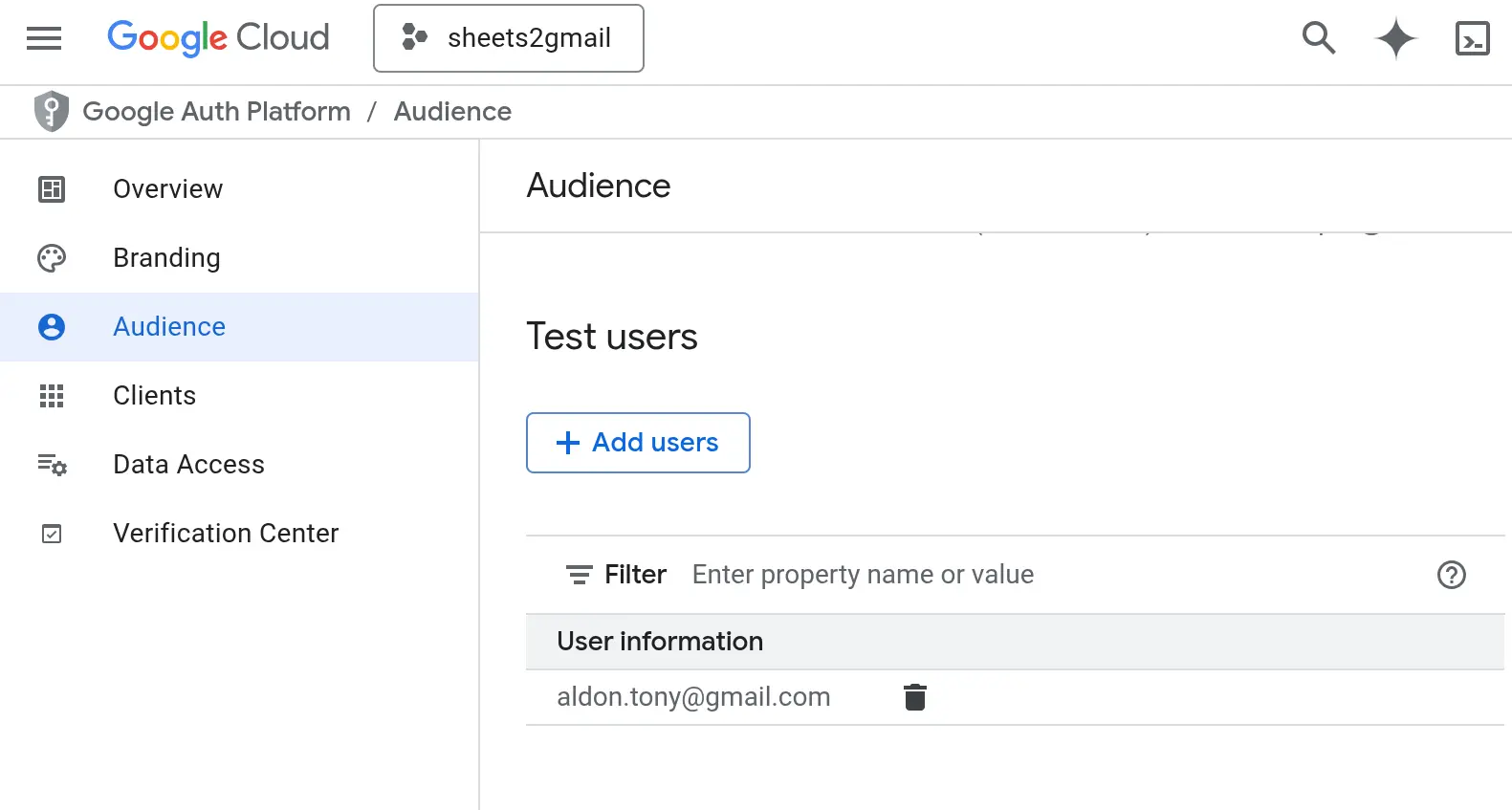

I added myself as a test user:

-

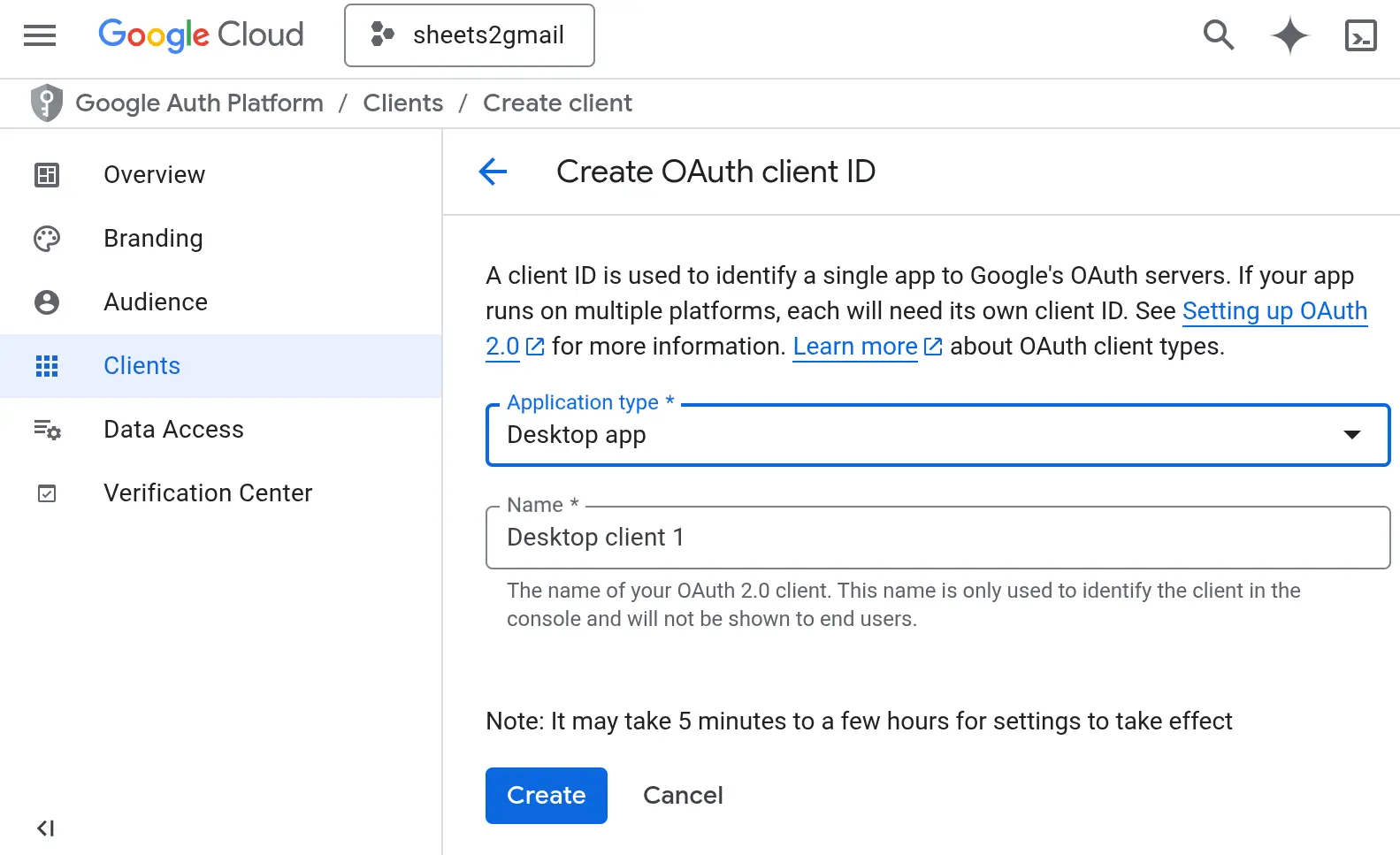

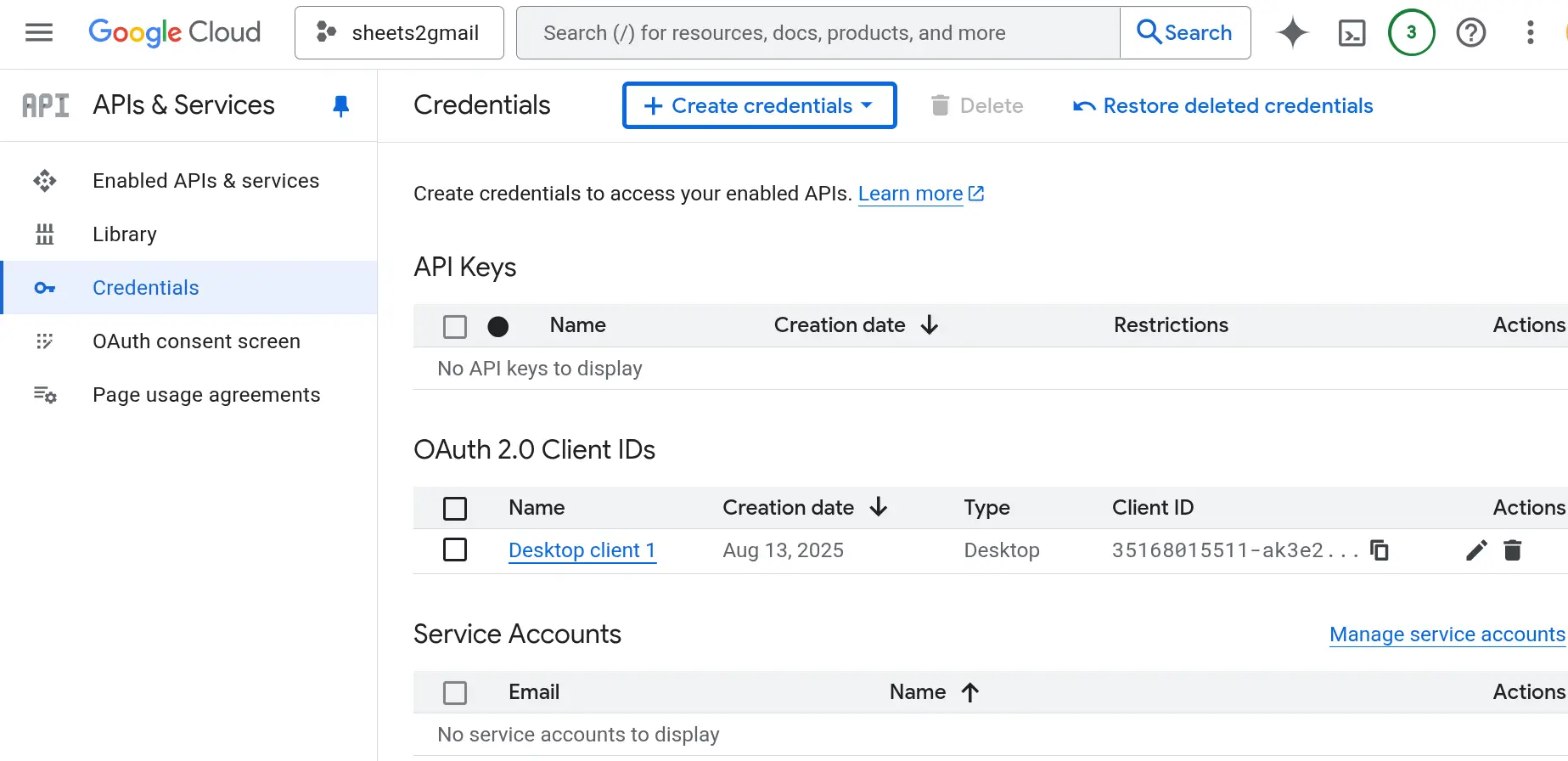

Then I created an OAuth client ID, downloaded it as a JSON file, and saved it my project directory as

credentials.json(right wheresheets2gmail_polling.pylives).

Note about the credentials

The credentials.json I

got after creating the OAuth client ID looks like this:

{

"installed": {

"client_id": "<some-id>.apps.googleusercontent.com",

"project_id": "sheets2gmail-468908",

"auth_uri": "https://accounts.google.com/o/oauth2/auth",

"token_uri": "https://oauth2.googleapis.com/token",

"auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

"client_secret": "...",

"redirect_uris": [

"http://localhost"

]

}

}

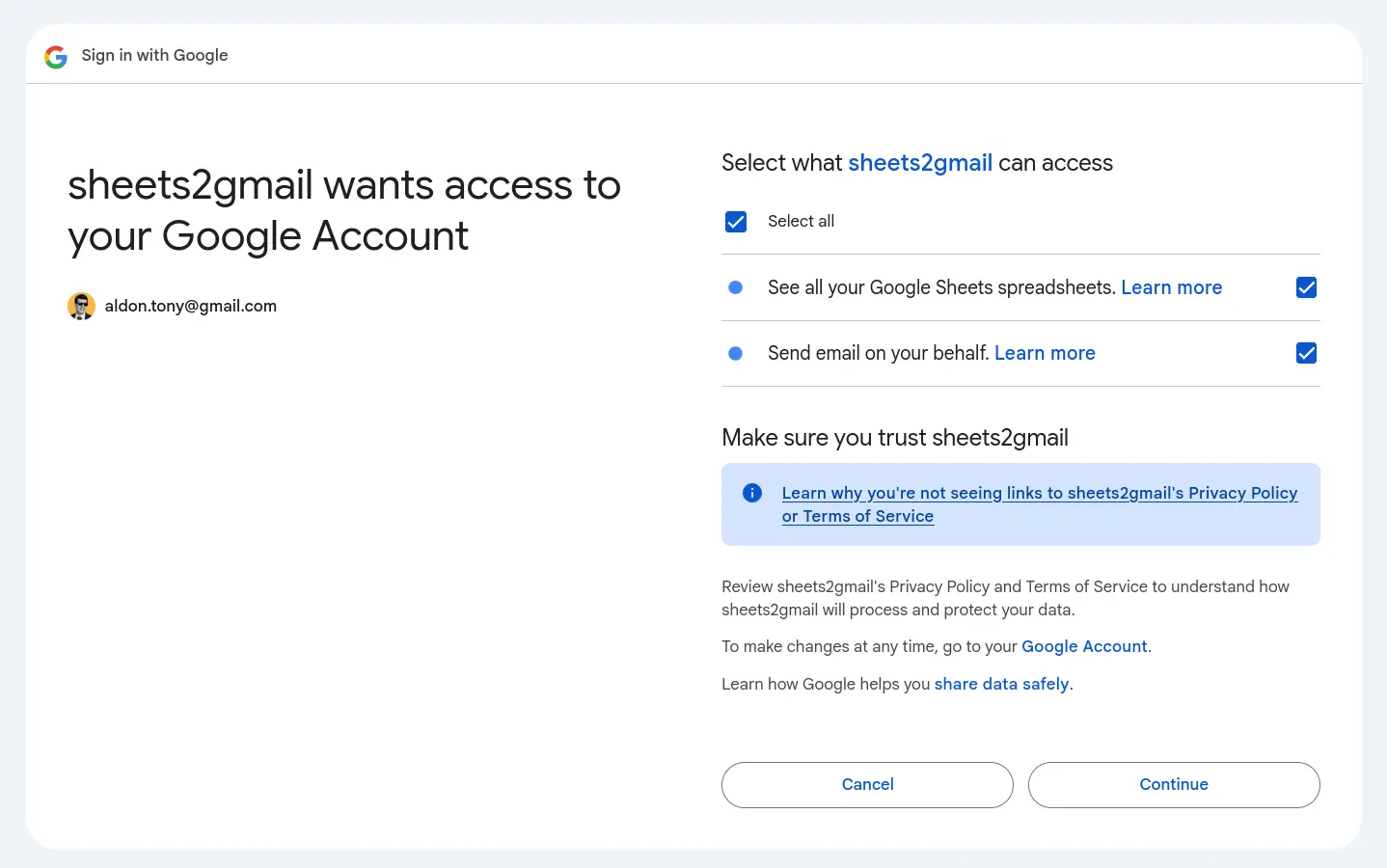

The first time I ran

sheets2gmail_polling.py, it prompted me to authenticate and approve access for the

Google Sheets and Gmail APIs (according to the scopes in the

SCOPES variable):

SCOPES = ["https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"]

After authenticating, the script created a

token.json file. This

allows the script to reuse the credentials without prompting me

again, even after restarting.

The contents look something like this:

{

"token": "...",

"refresh_token": "...",

"token_uri": "https://oauth2.googleapis.com/token",

"client_id": "<some-id>.apps.googleusercontent.com",

"client_secret": "...",

"scopes": [

"https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"

],

"universe_domain": "googleapis.com",

"account": "",

"expiry": "2025-08-11T10:19:20Z"

}sheets2gmail_polling.py

If you want to try the script yourself:

-

Don't forget to create your own

credentials.json, as described above. -

Update these variables:

SPREADSHEET_ID,SHEET_NAME,FROM_ADDR. -

Optionally, adjust

POLLING_INTERVALif you want it to check more or less frequently.

$ uv init

$ uv add google-api-python-client google-auth-httplib2 google-auth-oauthlib

$ uv run sheets2gmail_polling.py# sheets2gmail_polling.py

import os.path

import hashlib

import sqlite3

import logging

import time

import base64

from email.message import EmailMessage

from google.auth.transport.requests import Request

from google.oauth2.credentials import Credentials

from google_auth_oauthlib.flow import InstalledAppFlow

from googleapiclient.discovery import build

from googleapiclient.errors import HttpError

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger("sheets2gmail-polling")

# If modifying these scopes, delete the file token.json.

SCOPES = ["https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"]

CACHE_DB_FILE = "cache.sqlite3"

POLLING_INTERVAL = 60

SPREADSHEET_ID = "1EpC3YACK-OzTJzoonr-GRS-bEnxM5Qg9bDH9ltQFT3c"

SHEET_NAME = "Sheet1"

FROM_ADDR = "aldon.tony@gmail.com"

def credentials():

"""Get credentials from token.json file.

If not present or invalide, authenticate first using credentials.json file.

See https://developers.google.com/workspace/sheets/api/quickstart/python."""

creds = None

# The file token.json stores the user's access and refresh tokens,

# and is created automatically when the authorization flow completes

# for the first time.

if os.path.exists("token.json"):

creds = Credentials.from_authorized_user_file("token.json", SCOPES)

# If there are no (valid) credentials available, let the user log in.

if not creds or not creds.valid:

if creds and creds.expired and creds.refresh_token:

creds.refresh(Request())

else:

flow = InstalledAppFlow.from_client_secrets_file(

"credentials.json", SCOPES

)

creds = flow.run_local_server(port=0)

# Save the credentials for the next run

with open("token.json", "w") as token:

token.write(creds.to_json())

return creds

def hash_row(row):

"""Return a SHA256 hexdigest of `row`."""

s = '|'.join(str(x) for x in row)

return hashlib.sha256(s.encode()).hexdigest()

def get_rows(creds, spreadsheet_id, sheet_range):

"""Return list of rows in `sheet_range` of `spreadsheet_id`."""

with build("sheets", "v4", credentials=creds) as service:

response = (

service.spreadsheets()

.values()

.get(spreadsheetId=spreadsheet_id, range=sheet_range)

.execute()

)

return response.get("values")

def setup_cache_db(dbfile):

conn = sqlite3.connect(dbfile)

c = conn.cursor()

c.execute('''

CREATE TABLE IF NOT EXISTS cached_rows (

row_index INTEGER PRIMARY KEY,

row_hash TEXT

)

''')

c.execute('''

CREATE TABLE IF NOT EXISTS pending_emails (

id INTEGER PRIMARY KEY AUTOINCREMENT,

email TEXT,

note TEXT,

fail_count INTEGER DEFAULT 0

)

''')

conn.commit()

return conn

def check_rows(conn, rows):

"""Return the list of new or updated rows.

Elements are tuples (<state>, row_index, row_hash, row)

where <state> is \"new\" or \"updated\"."""

c = conn.cursor()

new_updated_rows = []

for idx, row in enumerate(rows[1:]):

# +1 because we don't pass the first row (column names) in rows

# +1 because Google Sheets start counting at 1

idx_sheet = idx + 2

h = hash_row(row)

c.execute("SELECT row_hash FROM cached_rows WHERE row_index=?", (idx_sheet,))

result = c.fetchone()

if result is None:

logger.info(f"Row {idx_sheet} is new: {row}")

new_updated_rows.append(("new", idx_sheet, h, row))

elif result[0] != h:

logger.info(f"Row {idx_sheet} has been updated: {row}")

new_updated_rows.append(("updated", idx_sheet, h, row))

logger.info(f"{len(new_updated_rows)} row(s) new/updated.")

return new_updated_rows

def update_cache(conn, new_updated_rows, sheet_row_count):

"""Update cached_rows table in `conn` listed in `new_updated_rows`.

Add `new_updated_rows` in pending_emails table in `conn`."""

c = conn.cursor()

for state, idx, h, row in new_updated_rows:

if state == "new":

c.execute("INSERT INTO cached_rows (row_index, row_hash) VALUES (?, ?)", (idx, h))

else:

c.execute("UPDATE cached_rows SET row_hash=? WHERE row_index=?", (h, idx))

if len(row) < 2 or row[1] == "":

logger.error(f"No email provided in row {idx}: {row}")

continue

if len(row) < 3 or row[2] == "":

logger.error(f"No note provided in row {idx}: {row}")

continue

c.execute("INSERT INTO pending_emails (email, note) VALUES (?, ?)", (row[1], row[2]))

c.execute("DELETE FROM cached_rows WHERE row_index > ?", (sheet_row_count,))

conn.commit()

def send_email(creds, from_addr, to, content):

"""Send email to `to` with content `content`."""

service = build("gmail", "v1", credentials=creds)

message = EmailMessage()

message["From"] = from_addr

message["To"] = to

message["Subject"] = "Automation Test"

message.set_content(content)

encoded_message = base64.urlsafe_b64encode(message.as_bytes()).decode()

body = {"raw": encoded_message}

send_message = (

service.users()

.messages()

.send(userId="me", body=body)

.execute()

)

return send_message["id"]

def send_emails(conn, creds, from_addr):

"""Send emails listed in pending_emails table in `conn`."""

c = conn.cursor()

c.execute("SELECT id, email, note FROM pending_emails WHERE fail_count < 3")

pending_emails = c.fetchall()

for email_id, to, content in pending_emails:

try:

msg_id = send_email(creds, from_addr, to, content)

logger.info(f"Email {msg_id} sent to {to}.")

c.execute("DELETE FROM pending_emails WHERE id = ?", (email_id,))

conn.commit()

except Exception as e:

logger.error(f"Failed to send to '{to}': {e}")

c.execute("UPDATE pending_emails SET fail_count = fail_count + 1 WHERE id = ?", (email_id,))

conn.commit()

c.execute("SELECT id, email, note FROM pending_emails WHERE fail_count = 3")

dead_emails = c.fetchall()

if dead_emails:

logger.warning(f"Some emails have failed to send after 3 attempts and remain in the `pending_emails` queue: {dead_emails}")

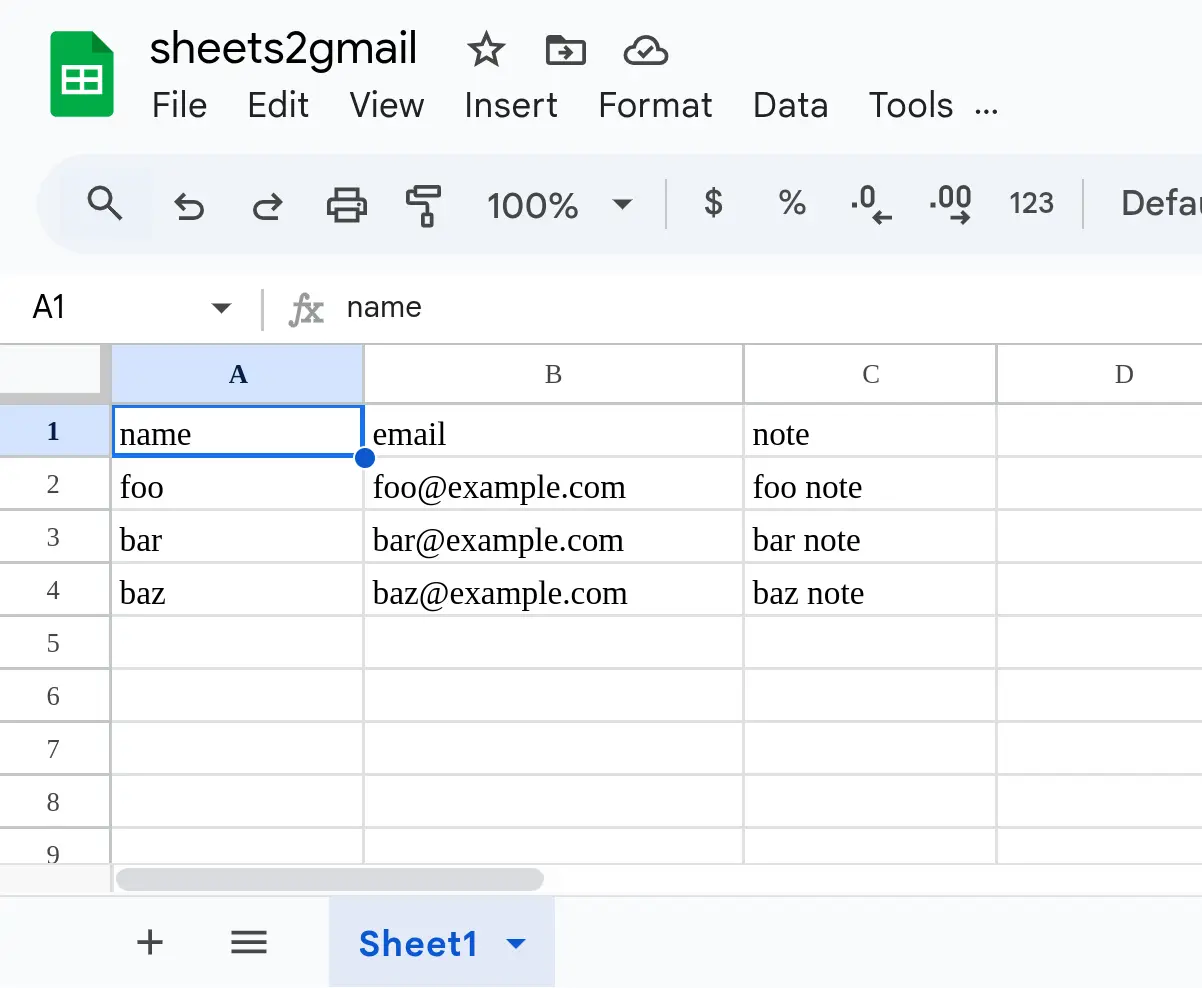

def main():

"""Track changes in `SPREADSHEET_ID`, specifically the sheet `SHEET_NAME`.

Each time a row in `SHEET_NAME` is updated, added or removed,

we send an email from `FROM_ADDR` to row[1] address with

row[2] as content. The subject is hardcoded to 'Automation Test'.

`SHEET_NAME` sheet must have the following shape:

name | email | note

foo | foo@example.com | foo note

bar | bar@example.com | bar note

baz | baz@example.com | baz note"""

conn = setup_cache_db(CACHE_DB_FILE)

creds = credentials()

# In case the program previously stopped before sending all the emails

send_emails(conn, creds, FROM_ADDR)

while True:

rows = get_rows(creds, SPREADSHEET_ID, SHEET_NAME)

if not rows or len(rows) == 1:

logger.info(f"No data in spreadsheet {SPREADSHEET_ID}, range {SHEET_NAME}")

else:

new_updated_rows = check_rows(conn, rows)

update_cache(conn, new_updated_rows, len(rows))

send_emails(conn, creds, FROM_ADDR)

time.sleep(POLLING_INTERVAL)

conn.close()

if __name__ == "__main__":

main()Getting an email by its message id

When an email is sent, the script logs its message ID like this:

INFO:sheets2gmail-polling:Email 1989d239d2c9ea33 sent to aldon.tony@gmail.com.

You can fetch the contents of a sent email by its ID using the

Gmail API, but you'll need to include the scope

"https://www.googleapis.com/auth/gmail.readonly".

For instance, for the message ID

1989d239d2c9ea33:

SCOPES = ["https://www.googleapis.com/auth/gmail.readonly"]

creds = credentials()

service = build("gmail", "v1", credentials=creds)

message = (

service.users()

.messages()

.get(userId="me", id="1989d239d2c9ea33")

.execute()

)

message

# {'id': '1989d239d2c9ea33', 'threadId': '1989d239d2c9ea33', 'labelIds': ['UNREAD', 'SENT', 'INBOX'], 'snippet': 'foo', 'payload': {'partId': '', 'mimeType': 'text/plain', 'filename': '', 'headers': [{'name': 'Received', 'value': 'from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'Received', 'value': 'from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'From', 'value': 'aldon.tony@gmail.com'}, {'name': 'To', 'value': 'aldon.tony@gmail.com'}, {'name': 'Subject', 'value': 'Automation Test'}, {'name': 'Content-Type', 'value': 'text/plain; charset="utf-8"'}, {'name': 'Content-Transfer-Encoding', 'value': '7bit'}, {'name': 'MIME-Version', 'value': '1.0'}, {'name': 'Date', 'value': 'Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'Message-Id', 'value': '<CABiT0JALXNkSsGqetEO9OckoALcW4MD6tsS9nPEeeC4MwFGVFA@mail.gmail.com>'}], 'body': {'size': 4, 'data': 'Zm9vCg=='}}, 'sizeEstimate': 544, 'historyId': '5510829', 'internalDate': '1754983013000'}Here's the JSON, pretty-printed for readability:

{

"id": "1989d239d2c9ea33",

"threadId": "1989d239d2c9ea33",

"labelIds": [

"UNREAD",

"SENT",

"INBOX"

],

"snippet": "foo",

"payload": {

"partId": "",

"mimeType": "text/plain",

"filename": "",

"headers": [

{

"name": "Received",

"value": "from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "Received",

"value": "from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "From",

"value": "aldon.tony@gmail.com"

},

{

"name": "To",

"value": "aldon.tony@gmail.com"

},

{

"name": "Subject",

"value": "Automation Test"

},

{

"name": "Content-Type",

"value": "text/plain; charset=\"utf-8\""

},

{

"name": "Content-Transfer-Encoding",

"value": "7bit"

},

{

"name": "MIME-Version",

"value": "1.0"

},

{

"name": "Date",

"value": "Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "Message-Id",

"value": "<CABiT0JALXNkSsGqetEO9OckoALcW4MD6tsS9nPEeeC4MwFGVFA@mail.gmail.com>"

}

],

"body": {

"size": 4,

"data": "Zm9vCg=="

}

},

"sizeEstimate": 544,

"historyId": "5510829",

"internalDate": "1754983013000"

}Missing features & advantages in Zapier (according to gpt-4.1)

While I was writing the

sheets2gmail_polling.py

script, I started noticing what was missing—and

how much extra work would be needed—to reach

the level of professional automation that Zapier offers.

Naturally, I got curious, so when I finished the script, I asked

gpt-4.1 the following:

What is missing in the Python script compared to a Zap in Zapier.com that makes Zapier preferable?

This was the absolutely expected answer I received:

-

Instant Triggers: Zapier can use Google Drive's push notifications for near real-time updates, whereas your script is limited to polling on an interval (e.g., every 60 seconds or longer), which may not catch changes as quickly.

-

Error Handling and Notification: Zapier provides user-friendly error messages, built-in alerts, and automatic retries. If a step fails (e.g., missing required email address), it explains the error and notifies you immediately.

-

Monitoring, Logging, and Troubleshooting: Detailed run history, centralized logs, and a troubleshooting UI are built-in. You can see what happened in each Zap run, including data passed between steps and the exact reason for failure. Your script just logs messages to a file/console.

-

No-Code Editing: The workflow can be edited, tested, and expanded with a few clicks—no need to edit code or redeploy.

-

Built-in Authentication, Token Management, and Security: Zapier manages OAuth tokens and keeps them secure, handling refresh and expiration automatically.

-

Scalability and Reliability: Zapier is a managed platform—no worries about your server going down, system restarts, or losing state during updates.

-

UI for Workflow Management: Easy to enable/disable Zaps, manage multiple automations, see a dashboard, get system updates, and more—all through a graphical interface.

References

-

Google Cloud Console

-

google-api-python-client

-

Google Sheets API

-

Gmail API

-

https://developers.google.com/workspace/gmail/api/quickstart/python

-

https://developers.google.com/workspace/gmail/api/reference/rest/

-

https://developers.google.com/workspace/gmail/api/reference/rest/v1/users.messages/get

-

https://developers.google.com/workspace/gmail/api/reference/rest/v1/users.messages/send

-

https://developers.google.com/workspace/gmail/api/guides/sending#python

-

-

Google Drive API

Built with one.el.